Modern AI Stacks: Understanding the Layers and Value Capture Opportunities

Last Friday I was chatting with some friends, one of whom is a venture capitalist and the other a founder, about how much my entire company uses new AI tools each day. I have thought a lot about where value capture will likely occur, and was interested in their perspectives. As I started describing my perspective on value capture in AI, it became obvious that this is not widely known information, so we agreed I should write it up. The goal is to help VCs and founders identify where a business idea for a company leveraging AI could capture value. This should help provide some structure around identifying or crafting potential moats, which should in turn drive better quality company creation and investment decisions.

There is a growing set of concerns about how to invest in AI startups. As Chamath suggested in the All-In podcast Ep. 124, VCs are now looking at these tools as superpowers that should enable founders to do far more with less. Because the tools decrease the skill gap, it should be possible to invest in lower dollar value deals across a wider range of companies. Unfortunately, this Pitchbook article suggests that incumbents are dominating the space right now.

Although the incumbents have done a great job building out the capabilities, I believe there is plenty of pie to go around, and that the first-mover advantage isn’t necessarily as great as it may at first seem.

In this blog post, I will do my best to explain the composition of modern AI stacks, exploring their key layers: infrastructure, data, models, RLHF (Reinforcement Learning from Human Feedback), trust and safety, and application. I will also highlight where financial value may accrue due to value capture, and explain where the existing incumbents sit in the stacks.

1. Infrastructure

AI, like all tech, needs to run on hardware and software infrastructure. This includes cloud computing platforms, specialized processors (such as GPUs, TPUs, and custom ASICs), security services, networking and storage solutions tailored for AI workloads. Most of this stuff is off-the-shelf, with the only specialised stuff generally being the GPUs. The rate of innovation here is wild, but the value capture is being dominated by a fucking tiny number of companies:

Nvidia, which designs the chips (AMD is doing a great job competing here, less so ARM)

TSMC, who manufactures the chips (competitors are 5–8 years behind; or 2 hardware generations)

ASML, who builds some of the core hardware (EUV lithography) for TSMC

Value capture

Competing in the manufacturing layers here is infeasible. You’d need some rocket surgeon to come up with a novel low-cost manufacturing technique, then they’d need billions in investment capital, and they’d need a background in both company building and world-class operational rigour. You can see an attempt here by a Chinese national who left ASML and tried to start up his own business to compete:

Chip design is super specialised. It’s kinda infeasible to do that unless you can leverage open source designs and go from there (of which there are very few).

2. Data

Data forms the backbone of AI systems. The quality and quantity of data used to train and test models significantly impact the performance of AI applications.

I think of there being 2 main layers:

Sourcing — capturing data as it’s being created, be it from the open Internet, proprietary data or data accessible by purchase from 3rd parties

Processing — annotating/tagging, data cleaning and transforming it

There are a massive number of companies that capture huge amounts of data, and do precisely nothing with it. Usually they use it for corporate dashboards and little more. There is a hidden layer of opportunity scattered awkwardly throughout the world right now, and there is going to be a digital scavenger hunt to find who has what data and how it might be leveraged.

Value capture

There is a tonne of opportunity here! Loads of different dynamics can result in any individual or company having access to unique data sets with significant moats. How many people do you think could access the world’s authoritative source of data on fighter jet fuel consumption, or the number of toilets that get clogged per year in a specific city? There are a myriad of combinations that could give the right companies unique access to data, or unique ways of tagging and processing it.

3. Models

AI models are the algorithms and techniques used to process data and generate intelligent outputs. This is the layer where the Large Language Models reside, which is the approach that has launched this massive spike in awesome AI. They’re based on a variant of a neural network called a transformer.

Prior deep learning approaches just weren’t anywhere near as awesome. Most people were trying to make them domain-specific, and focused on optimising the data set. What researchers discovered was that pumping a fucktonne of data through a transformer that’s been well tuned is a far superior approach.

The variation in opportunity in this area is found in the approaches used for tuning the following aspects of the transformer models:

Self-attention mechanisms: how the model weighs the importance of different parts of the input sequence against each other (this is what gives you good sentence flow)

Feedforward networks: how more complex patterns in the data are identified in a non-linear manner

Unsupervised learning mechanics: the way the model is trained on the massive corpus of data to predict the next word or sequence of words

Stochastic gradient descent: the way the weights and biases of the neural network are tuned to minimise a loss function (the difference between the predicted probability distribution over the vocabulary and the true distribution)
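To make a few of those pieces less abstract, here is a toy sketch in plain Python. It is nowhere near a real transformer: the function names are mine, the vectors are made up, the feedforward part is left out, and the gradient step is applied directly to the output scores rather than to network weights (real training pushes the gradient back through every layer). Treat it as an illustration under those assumptions.

```python
import math

def softmax(scores):
    """Turn a list of raw scores into probabilities that sum to 1."""
    biggest = max(scores)
    exps = [math.exp(s - biggest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over a short sequence of vectors."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # Score this position against every position in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs

def cross_entropy(predicted_probs, true_index):
    """Loss for one next-word guess: low when the true word gets high probability."""
    return -math.log(predicted_probs[true_index])

def sgd_step(logits, true_index, learning_rate=0.5):
    """One gradient step on the scores (simplification: no weights, no backprop)."""
    probs = softmax(logits)
    # Gradient of cross-entropy with respect to the logits is probs minus one-hot.
    grads = [p - (1.0 if i == true_index else 0.0) for i, p in enumerate(probs)]
    return [l - learning_rate * g for l, g in zip(logits, grads)]

# Three made-up 2-dimensional "word" vectors attending to each other.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = self_attention(tokens, tokens, tokens)
```

Every knob in there (how scores are scaled, what the loss looks like, how big each step is) is a place where real models differ, which is the point about tuning.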

So as you can see there is shitloads of tuning here that’s possible. This isn’t just a black box — there’s a tonne of research, open source stuff and proprietary stuff that can go down in model town.

AND THERE’S MORE!

The way these models are designed allows you to easily layer more specialised pre-trained models on top of each other! This is where awesome sites like Hugging Face and CivitAI are dominating. I’ll use the Stable Diffusion text-to-image model as an example. It’s so fucking awesome: you can download the base SD1.5 model, and then you can download any other “checkpoint” that is a variation on that model that people have tuned for domain use cases, like anime or landscape, oil paintings or sketches. You can then download LoRAs that allow for more finely tuned options, like Studio Ghibli anime or Pokémon anime.
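If you're wondering why a LoRA can be a small download that sits on top of a big base model, the rough idea is that it stores a low-rank adjustment to the base weights rather than a whole new set of weights. Here is a minimal sketch of that idea in plain Python. The function names, the tiny matrices and the `scale` knob are illustrative assumptions, not the file format or API of any particular tool.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]

def apply_lora(base_weight, lora_down, lora_up, scale=1.0):
    """Return base_weight + scale * (lora_up @ lora_down), leaving the base untouched."""
    delta = matmul(lora_up, lora_down)
    return [
        [w + scale * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(base_weight, delta)
    ]

# A made-up 2x2 "base model" weight and a rank-1 adjustment.
base = [[1.0, 0.0], [0.0, 1.0]]
up = [[1.0], [2.0]]    # 2x1
down = [[0.5, 0.0]]    # 1x2
tuned = apply_lora(base, down, up, scale=0.5)
```

Setting `scale` to 0 gives you the base model back, and different adjustments can be applied to the same base, which is roughly why mixing and matching is so cheap.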

This means that if you want a specific AI model, the technical aspects are optional for you to engage with. As a business, you can just download open source models, layer them up in a unique way that meets your business use case, and sell your product to the world. There is a wealth of opportunity here.

Value capture

Companies developing AI models or offering pre-trained models for specific use cases are prime investment targets, as there is loads of opportunity to specialise here. Additionally, solutions that enable faster training or more efficient model deployment can attract significant financial interest. This is why Stable Diffusion is so popular with builders: you can use their products openly and easily. How they capture value is highly concerning to me at this point, but they’ve built a foundation for a tonne of innovation that can be captured by others.

4. RLHF (Reinforcement Learning from Human Feedback)

The RLHF layer involves using human feedback to fine-tune AI models iteratively. This feedback loop helps improve AI decision-making and aligns the AI’s behavior with human values, particularly in complex or ethically sensitive domains. This layer is a huge reason why OpenAI is so fucking good. They’ve created something that you can largely trust to not tell you to murder kittens or nude up in public. You can have a degree of confidence that if you embed it in your product/service, you’re not likely to have terrible outcomes.

This is because they’ve invested a tonne in making sensible judgement calls about how they’ve attuned the AI to be super useful across a wide range of areas.
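For a feel of how a human judgement call turns into something a model can be trained on, here is a small sketch of a pairwise preference loss, a common way to train the scoring model used in RLHF. The function name and the reward numbers are made up, and I'm not claiming this is what any particular lab runs.

```python
import math

def preference_loss(reward_chosen, reward_rejected):
    """Pairwise loss: small when the human-preferred reply scores higher."""
    margin = reward_chosen - reward_rejected
    # Equivalent to -log(sigmoid(margin)).
    return math.log(1.0 + math.exp(-margin))

# A labeller preferred reply A over reply B (scores are invented).
agrees_with_human = preference_loss(2.0, 0.0)
disagrees_with_human = preference_loss(0.0, 2.0)
```

The loss is lower when the scores agree with the human's pick, so training pushes the scoring model towards the labellers' judgement.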

Value capture

Companies that develop tools to facilitate the integration of human feedback in AI systems could be good places to build or invest. Startups focusing on innovative ways to improve the efficiency and scalability of RLHF will be particularly attractive. This is a part that is pretty unexciting, and I believe is often outsourced. It’s also super important, so this isn’t a mindless activity, it’s just a repetitive one that requires carefully thought out parameters. Every functional model requires this layer though, so there will be room to build. I’m less sure how to navigate value capture in this layer to be honest as I’ve spent less time focusing on it.

5. Trust and Safety

This layer of the AI stack addresses the ethical, legal, and safety concerns surrounding AI. This includes mechanisms to ensure privacy, transparency, explainability, and fairness in AI applications, as well as methods to mitigate biases and prevent misuse. So it’s super fucking important. Think of this as being the police, and if it detects that the LLM is going to say something that could harm people or be easily misinterpreted, it just says “no, ChatGPT, you can’t say that to the user”, and the user gets told “Sorry, I am unable to assist you with that request”. There are loads of hilarious examples on the Internet of people getting around it, but overall these layers do a good job so far.
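The "police" idea can be sketched as a wrapper that checks the request and the draft reply before anything reaches the user. Everything here is hypothetical: `fake_model` stands in for a real LLM call, and a keyword list is a crude placeholder for the trained classifiers a real trust and safety layer would more likely use.

```python
REFUSAL = "Sorry, I am unable to assist you with that request"

def fake_model(prompt):
    """Hypothetical stand-in for a real LLM call; returns a canned reply."""
    return f"Here is how to {prompt}"

def violates_policy(text, blocked_terms):
    """Crude check: does the text mention anything on the blocked list?"""
    lowered = text.lower()
    return any(term in lowered for term in blocked_terms)

def guarded_reply(prompt, model=fake_model, blocked_terms=("murder kittens",)):
    """Run the model, then refuse if the prompt or the draft reply breaks policy."""
    draft = model(prompt)
    if violates_policy(prompt, blocked_terms) or violates_policy(draft, blocked_terms):
        return REFUSAL
    return draft

safe = guarded_reply("bake bread")
blocked = guarded_reply("murder kittens")
```

Because the guard lives outside the model, you can swap in a different policy, or remove it entirely, without retraining anything.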

Of course, these layers are completely optional, and any day now we’re going to see ChatGPT competitors from cowboys who don’t care about ethics or privacy or humanity’s best interest, and will just remove this layer. The implication of this for founders and investors is that there is a wide range of options for how this layer can be addressed.

Value capture

There will be development of tools and frameworks for trust and safety in AI that could prove incredibly powerful. Companies that offer unique solutions to address ethical concerns, comply with regulations, and promote responsible AI adoption will be in high demand. I can imagine culturally-aligned trust and safety layers that can be plugged into a custom-trained model being super powerful, and making for a great company. Imagine doing the trust and safety layer for a South-East Asian therapy AI. Enormous TAM, incredible value to humanity, super important to get right, and potentially super profitable.

6. Application

Most businesses now being built are at the application layer, because the lower layers mentioned above are already being well served by the incumbents and serve as a great foundation for new value to be created. This layer includes:

feature engineering

integration into existing tools, workflows and services

domain-specific solutions and vertical integration

This is so fucking easy now — all you have to do is bang out some wireframes in Miro, build an app with a no-code tool, hire an engineer on Fiverr to set up your integrations and hit the OpenAI APIs and you’ve built an AI app, and it’s probably awesome.

That ease to create is the part that has VCs and founders alike concerned though, so let’s talk…

Value capture

This is now the easiest layer to disrupt. Yesterday I built a new product site in 8 minutes. I asked ChatGPT to give me a business name, then punched that into an AI website builder, which took 1 minute to create an amazing website, then I went and bought the domain, hooked it up, and BAM:

https://www.pathweaver.ai

is born. Literally, less than 10 minutes. From here, I started using AutoGPT to do my market research, figure out the main goals for the product, and it just started walking through the design and build of the app. You can literally just build apps semi-automated now.

So yeah: you have to be careful about investing or building at this layer. I was super excited to build my Pathweaver app, but in the basic form the moat is terrible. If it’s super easy to build, that’s great, but it means there will be a massive influx of competitors the second you have any success, then there will be a race to the ad platforms and it becomes a spending game. It’ll definitely work for some, but without understanding how hard value capture is at the application layer, you’re in danger of wasting your time and/or money building or investing in companies that are exclusively focused on this layer.

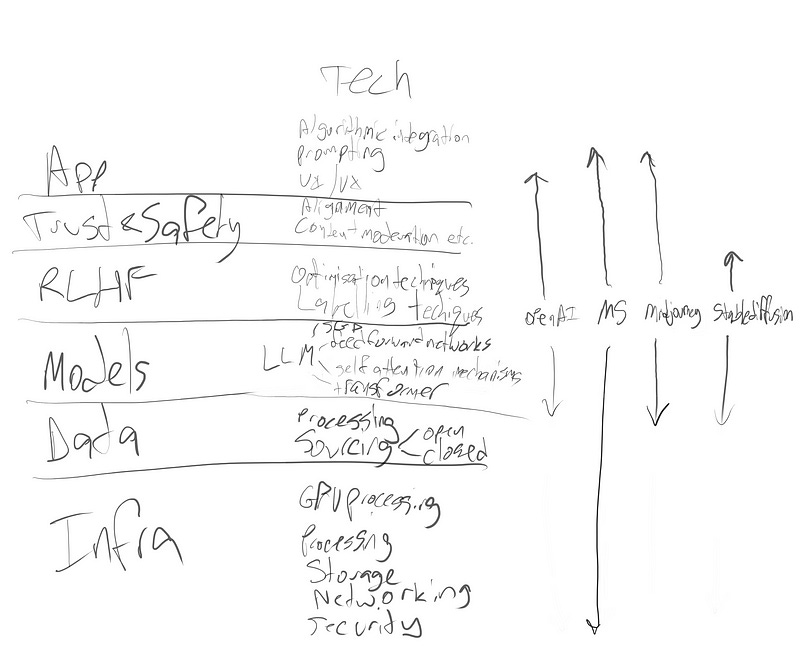

The AI stack — bringing it together

Here’s a basic diagram of the stack. If you want me to do an analysis of which layers of the stack various companies engage with, ask in the comments section!

Conclusion

Understanding the composition of AI stacks is super important for VCs or founders looking to invest in or leverage AI. If you don’t, you risk being smashed by a lack of moat that you didn’t know was absent. By exploring the various layers whilst you design your business or investment thesis, you can identify potential value capture opportunities, which will greatly de-risk your choices.

I think a lot of people are going to place huge value on incumbent businesses that leverage AI to create a further moat within their products due to previously accumulated data. I believe this is the safest investment thesis for VCs at this stage.

For founders, I recommend considering differentiating at the Data, Model and RLHF layers, as that’s where the largest possible moats for new products exist IMO.

If you want to discuss further, reach out or ask in the comments!